SEMESTER 1 Weeks 9 & 10

Design And Research Ops

Fieldwork Prep

As I neared the completion of my desk research, and was well into laying the foundations of my understanding of the current social issues relating to the exponential rise of generative a.i. in the creative industries, I decided to spend these two weeks preparing for my fieldwork. I had also received feedback that my documentation was subpar, so I decided to dedicate part of these weeks to improving it as well.

Image of: Anonymised shortlisted potential subject experts for the interview

I had initially proposed that this fieldwork take the form of one-on-one interviews with subject experts (i.e. those who either research or work with generative a.i., or who are simply veterans of the creative industries).

I had also intended to do a follow-up series of interviews with the creative workforce, just to see whether their opinions matched those of the experts. In my ideal scenario, the insights from these interviews would inform the direction of the speculative tool I want to design as part of the final outcome for this project. That said, I am aware that this is quite unlikely, and that the more likely scenario is that many of the interviewees will be pro-a.i. with little consideration for the potential implications. Hence, at this point, I am also prepared to design with that potential redirection in mind.

Image of: My scope for the interviews, and a snippet of the discussion guide.

With that said, I spent a good chunk of these two weeks scoping and planning the first round of interviews. I scouted various sources, such as past discussion and conference panels, LinkedIn, published works and other forms of networking, to find a good range of participants who I felt would bring valuable insight to the interviews. I then sent out the invites after getting approval from my supervisor, Andreas, and now I wait.

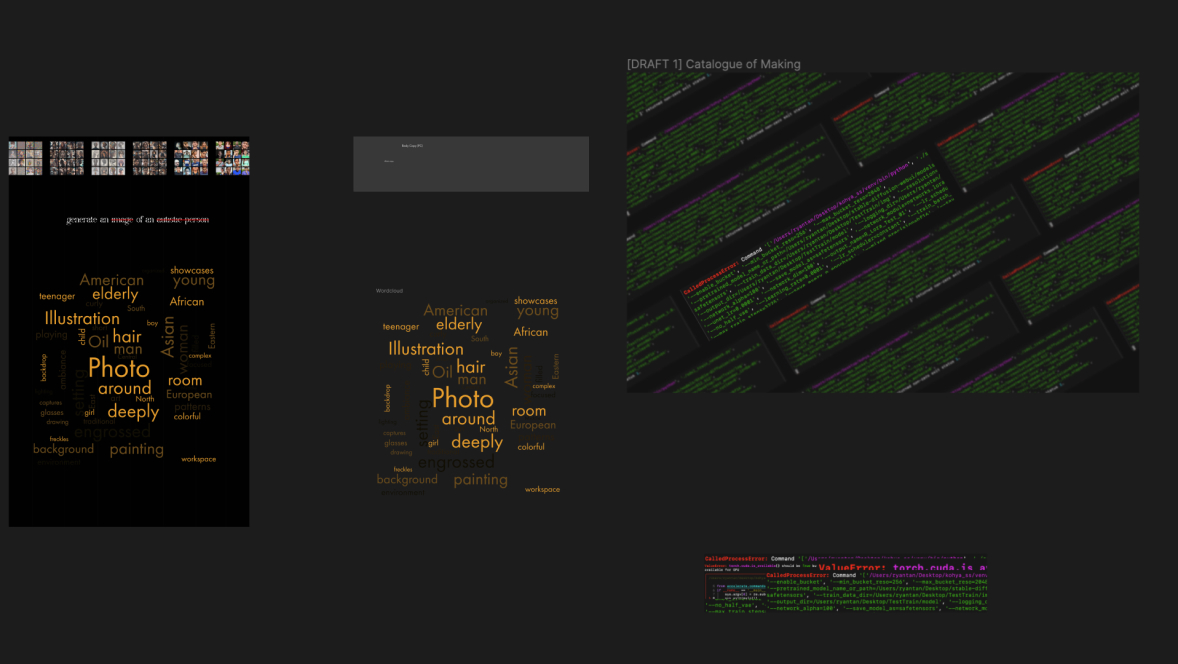

Playing with Generative Tools and Ideating Prototypes

Although I had already briefly tested the biases in the currently popular generative tools, I was somewhat stagnant again for these two weeks, so I decided on a simple activity: testing the biases in Stability’s Dream Studio and DALL-E 3, and comparing the results against the generations I had made a couple of weeks back.

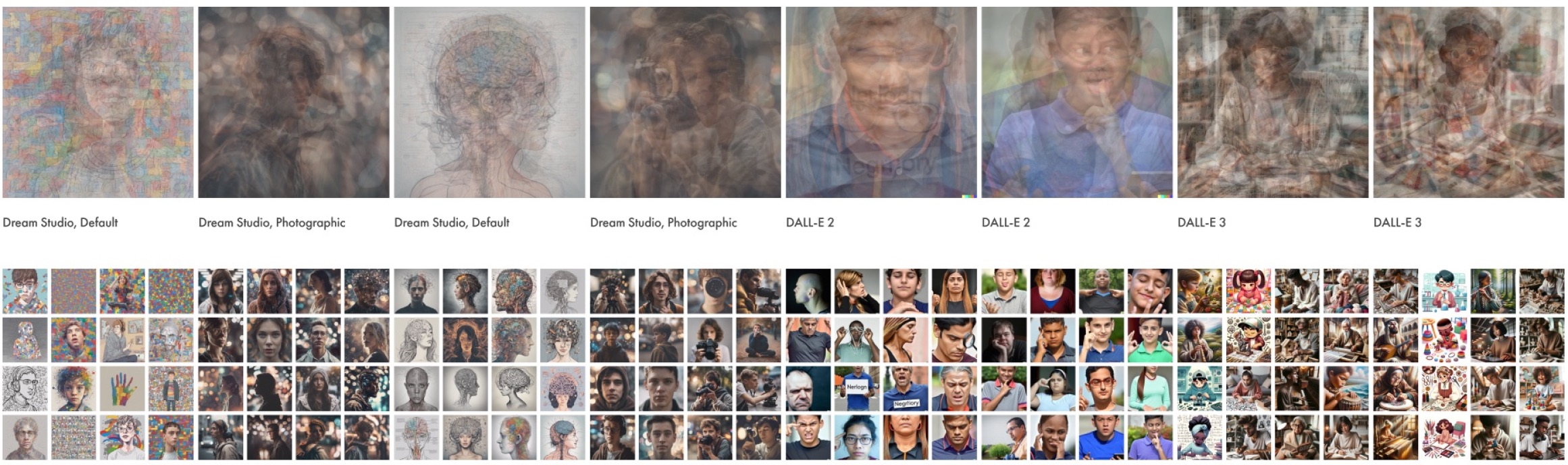

I used the same prompts as before, “generate an autistic person” and “generate a neurodivergent person”, and the results were definitely surprising. On one hand, DALL-E 2 blatantly showcased the biases and misrepresentation in the data; on the other, Dream Studio took the prompt quite literally.

Image of: What Dream Studio, DALL-E 2 and DALL-E 3 think “Autism” and “Neurodivergence” look like.

Honestly, I’m not even sure what the original data was tagged with to produce such an odd outcome. Then there is DALL-E 3, which straight up rewrote the prompt into something somewhat generic, I’m guessing in an attempt to not repeat the mistakes of its predecessor. However, some hidden biases were still exposed: although the generations showed a diverse selection of races and ages, every subject was involved in some kind of creative activity. This suggests that DALL-E 3 assumes by default that all “neurodivergent” and “autistic” people are inherently creative, and perhaps only capable of doing creative things. A positive stereotype, sure, but a stereotype nonetheless.

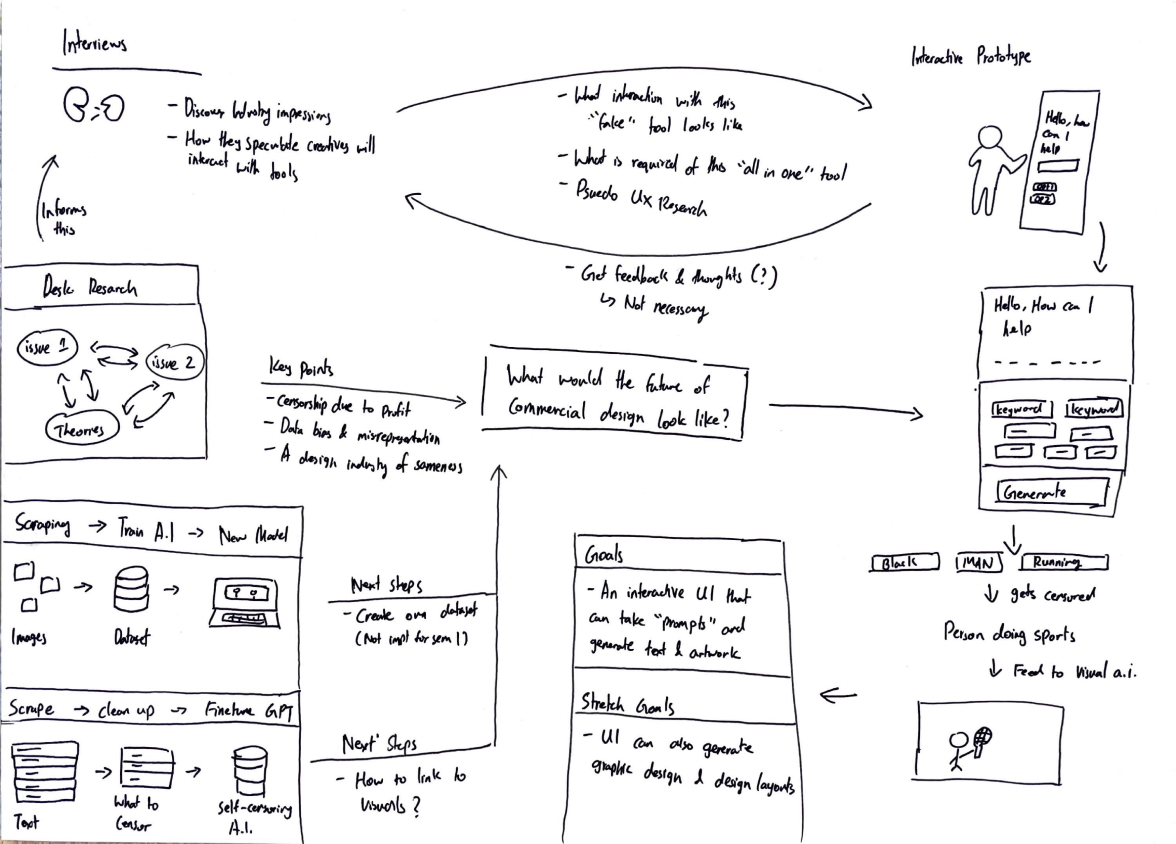

Image of: A sketch that outlines how the different experiments will come together.

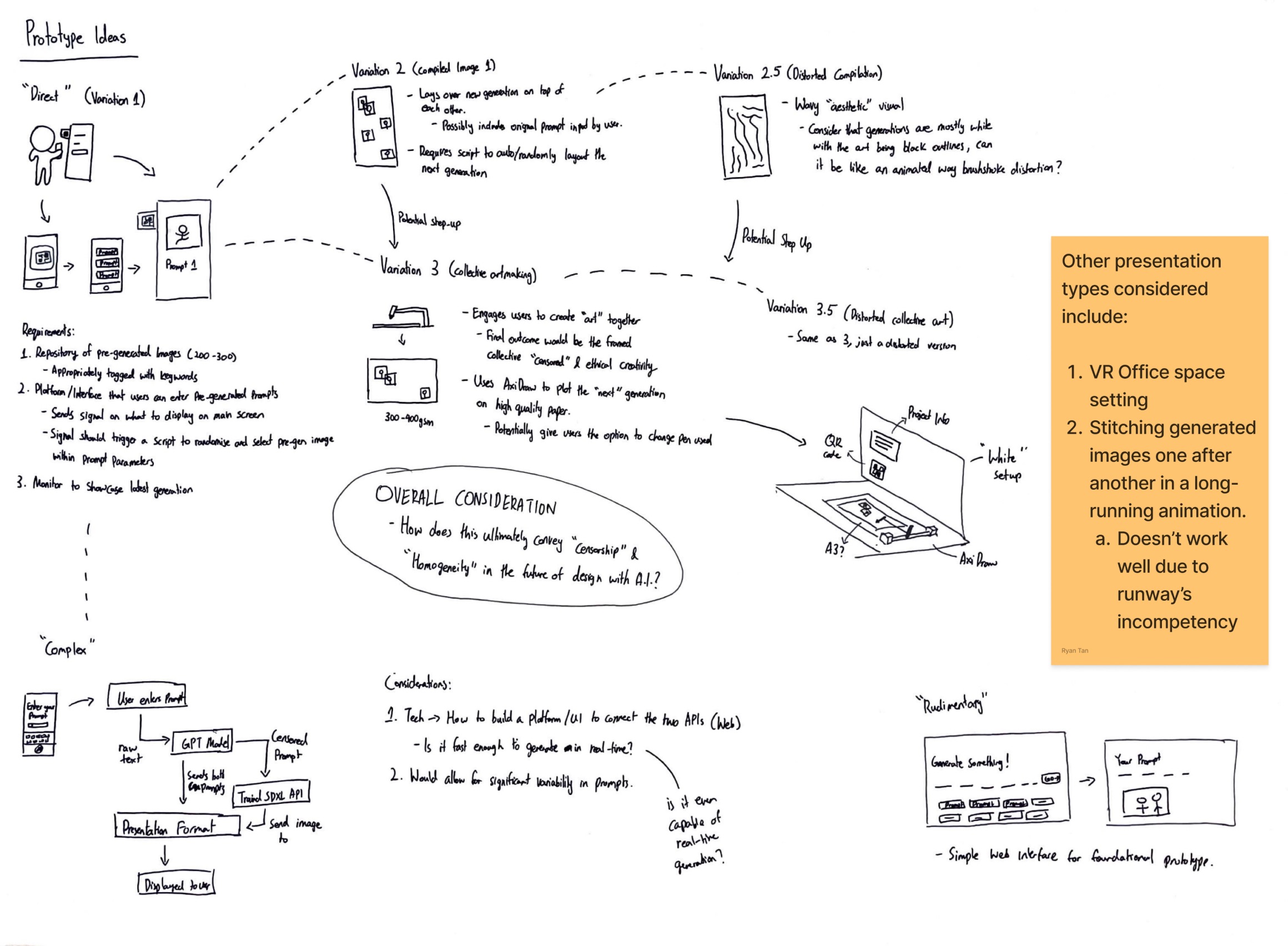

It was also around this point that I started to ideate on what my project's final outcome could look like. I began by laying out how each component and exploration is supposed to interact with the others. This seemed straightforward enough. I am also quite insistent on some kind of installation rather than yet another product solution, so I tried my hand at sketching out some prototype ideas as well.

For the most part, my considerations right now are how exactly to get the text-based a.i. to work, how to then link the visual generator to the text generator, and whether I can even run them together in real time. I also liked the idea of using AxiDraw to make the prototype somewhat interactive, inspired by Sougwen Chung's Drawing Operations, where she built a robot that used a.i. to draw alongside her to make a co-created artwork.

Image of: Ideating my potential final outcomes.

I also considered using RunwayML to create a constantly running animation: each person interacting with the final outcome would create a generation, which would be added onto the already long-running animation, so that the final outcome would be one long animation.

As RunwayML is one of the more popular tools for a.i.-generated video, I decided to give it a try. First, I generated stick people in DALL-E 2, then I attempted to animate them in Runway. However, the results were complete rubbish. I'm fairly certain this is partly due to my limited RunwayML prompting knowledge, but I'm also certain that the tool is only really good for cinematic-esque generations. This is definitely another clear case of data bias.

Video of: Attempting to make an A.I.-generated animation.

Documentation Revamp

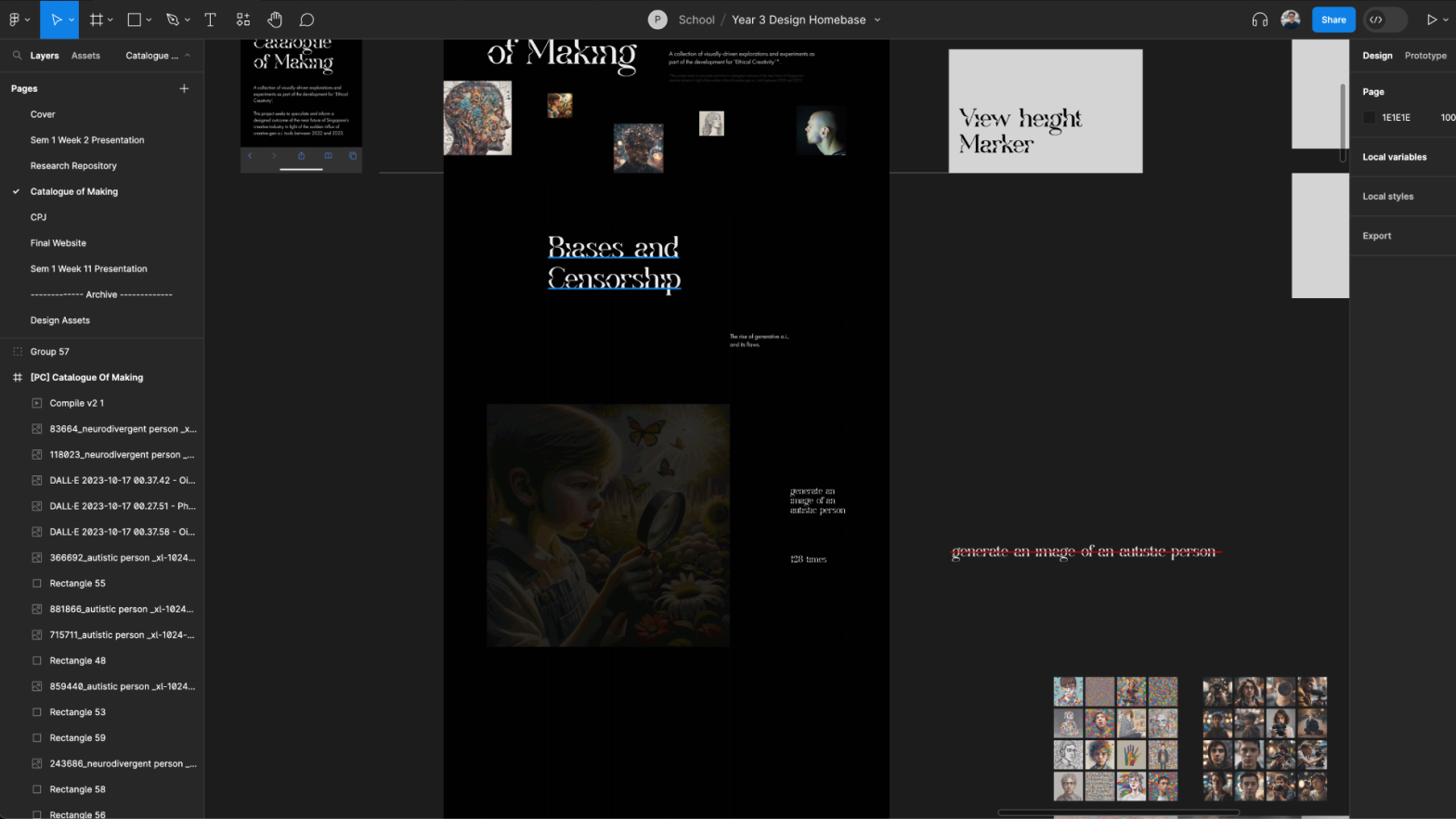

As part of the formative feedback, it was pointed out to me that while the work done was decent, the documentation was effectively rubbish. The control over the overall aesthetics and the like was amateurish and simply not up to standard. While I initially disagreed with the insistence on beautifying documentation over dedicating more time to the actual work, I relented when I realised the value of communicating the project in a visual manner.

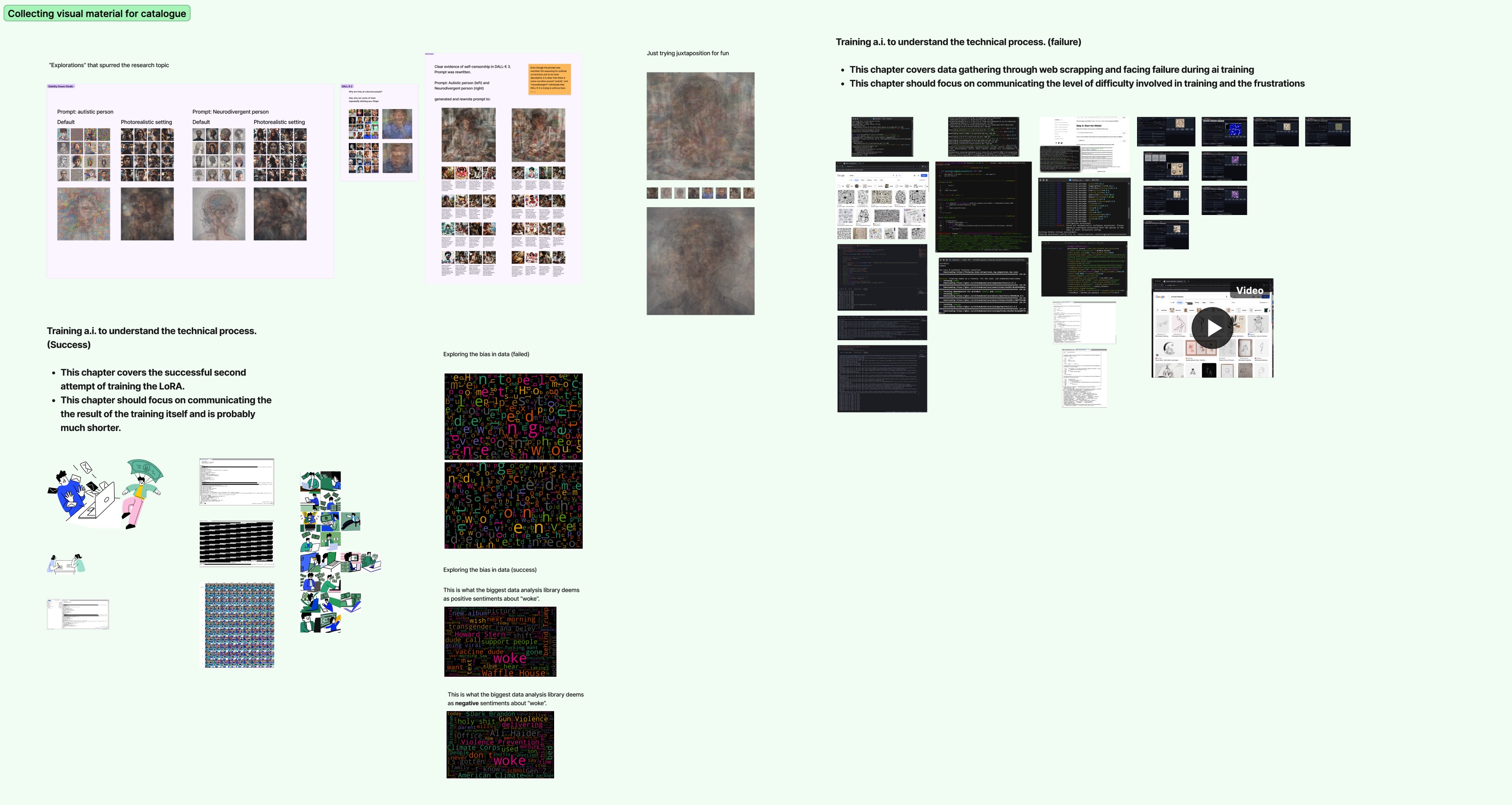

After all, this would come in extremely handy when presenting the project as a case study that is engaging and not overwhelming, and my overall UI skills needed a refresher as well. Deciding to take this more seriously, I began by collecting all my visual material. According to my supervisor, Andreas, I already had more than enough.

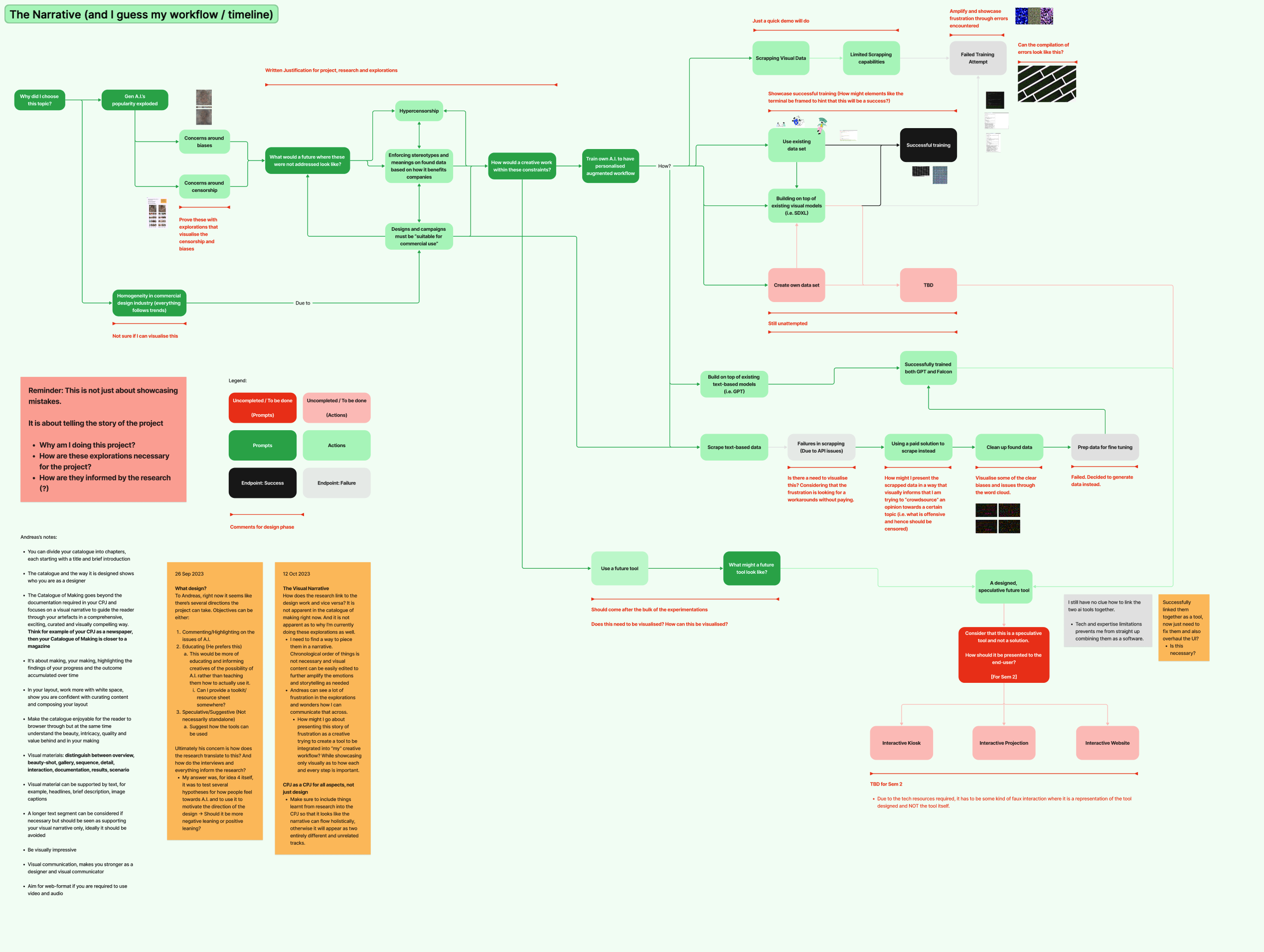

After that, I started drafting a narrative. Realising that the catalogue needn’t be in the strictest chronological order, I drew out a flow based on the narrative of my explorations. This made designing vastly easier than in my previous iteration, which was just a bunch of loose layouts.

The following images are snippets from my process for redesigning my catalogue of making. Refer to my “experiments” tab for the actual catalogue of making.

Planning out the narrative definitely made things so much easier to design. I also took this opportunity to start defining the styles that I intend to use across the entire website for my project. I had envisioned a complete website that hosts all aspects of the submissions as well as the project itself, so this really helped streamline things for the future. With the documentation finally at a somewhat passable standard, I looked towards figuring out how to resolve my text-based a.i. training conundrum.