SEMESTER 1 Week 3

Training My Own AI?

Diving into AI Training

Just as with web scraping, I began the model-training segment of this exploration by educating myself on how AI training actually works. I had to learn what a LoRA was, what the different types of visual-based AI training entailed, and what the overall process and requirements of AI training were like. And again, there were many libraries and plugins to install.

LoRA (Low-Rank Adaptation) = A technique for fine-tuning deep learning models that reduces the number of trainable parameters and makes it efficient to switch between tasks. Basically, a cheaper way to train an AI with a limited dataset.
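To get a feel for why this is cheaper, here is a rough sketch of the arithmetic as I understand it. Instead of updating a full weight matrix, LoRA trains two much smaller matrices whose product stands in for the update. The layer size (1024 x 1024) and rank (8) below are numbers I made up for illustration; they are not taken from SDXL or from the Kohya_ss settings I used, and the function names are my own.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable values when the whole d_out x d_in weight matrix is fine-tuned."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable values when the update is factored into two low-rank matrices:
    one of shape d_out x rank and one of shape rank x d_in."""
    return rank * (d_out + d_in)


# Illustrative numbers only (assumed, not from any real model config).
d_out, d_in, rank = 1024, 1024, 8

full = full_update_params(d_out, d_in)
lora = lora_update_params(d_out, d_in, rank)

print(f"Full fine-tuning: {full:,} trainable values for this layer")
print(f"LoRA (rank {rank}): {lora:,} trainable values for this layer")
print(f"LoRA trains {lora / full:.2%} of what full fine-tuning would")
```

With these made-up numbers, the LoRA version trains 1/64 of the values for that one layer, which is where the "cheaper" part comes from.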

Image of: One of the tutorials I followed to train my model.

To train a visual-based AI model, I would first have to download one of the publicly available models. From a quick search, it seemed that the Stable Diffusion models by Stability AI were the most popular. I decided to go with the SDXL model, which was newly released and appeared to have more capabilities than its predecessor. After that, I had to set up the Kohya_ss trainer, which is basically a pre-written library and web interface for training the model, so that I would not have to write the entire training code myself.

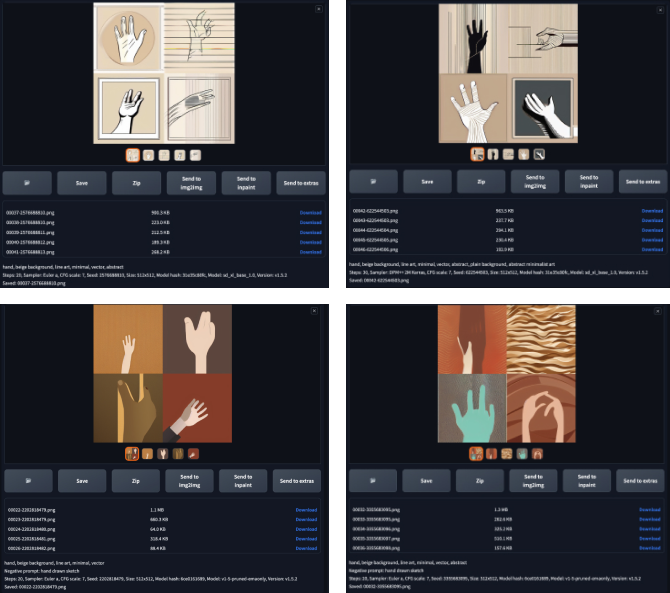

While waiting for the various Kohya_ss packages to install, and since I had already installed the base SDXL model, I tried prompting it with the same prompt I intended to use with the trained model. I wanted an early look at what the base model would give me, so that I could contrast it against the outputs of my trained model.

Image of: Testing the base model with the intended prompt

From my understanding, one of the key reasons to fine-tune a model with a LoRA at all was so that you wouldn’t need to be so detailed in your prompts. You could simply prompt it with the LoRA’s identifier, and that would be it.

I’m not sure what I was expecting from the base model’s generations, but they were definitely far from what I had envisioned the trained model would output. I set these generated images aside and moved on to my next steps.

Following the tutorial, I went on to use the Kohya_ss trainer to tag my training images (which I had previously scraped in week 2). After finishing all the setup and inputting the required parameters, I was finally ready to train the model.

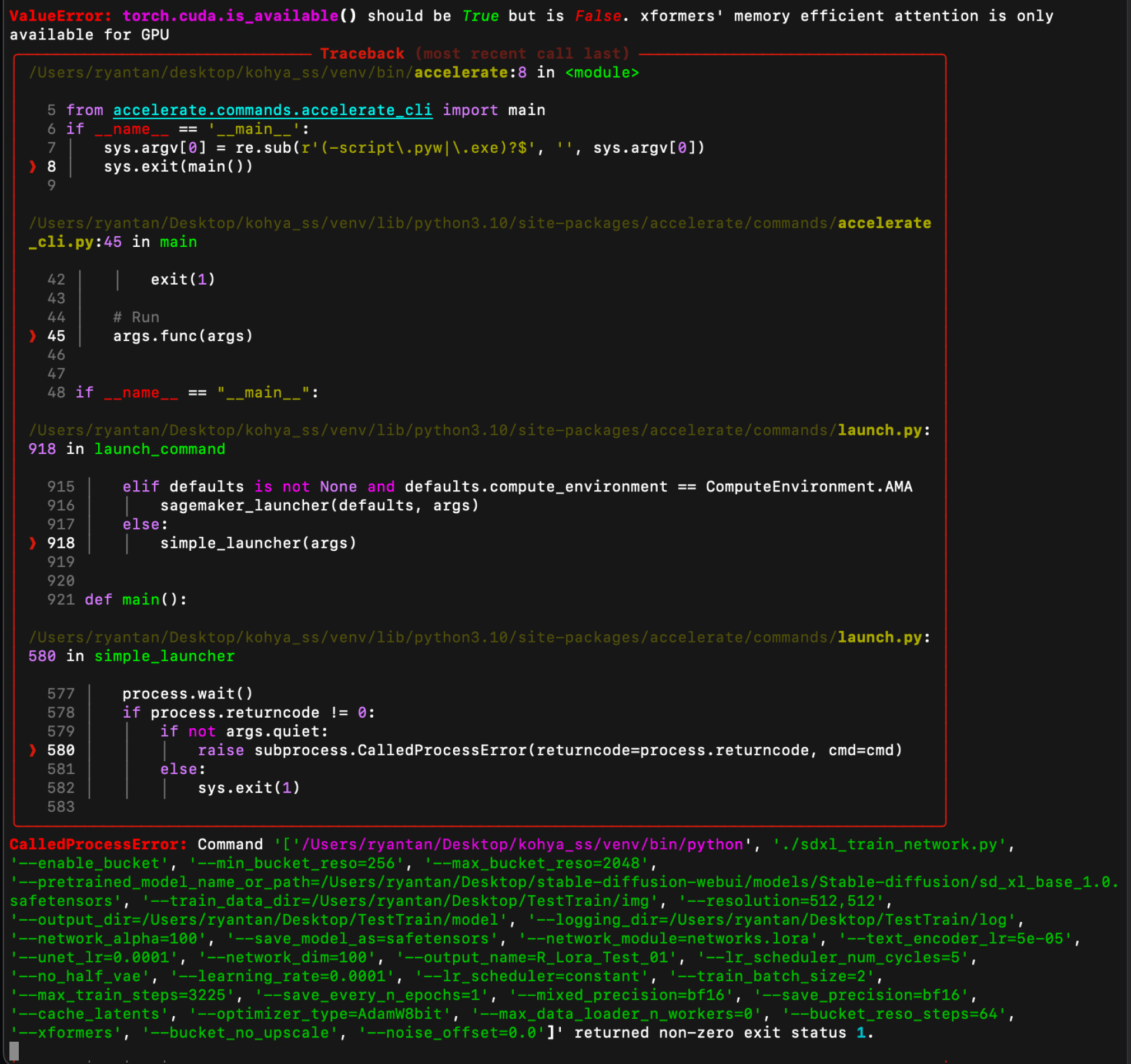

Image of: That one error that occurred repeatedly during LoRA training.

However, my excitement quickly turned to frustration when I kept encountering the same error over and over again: insufficient CUDA memory, and so on. As far as I could tell, this was simply because I was using a Mac with an ARM chip, while the program required an Nvidia GPU with CUDA cores. I don’t think the image above can convey just how annoying this one error was.
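In hindsight, a quick check like the one below might have told me early on that my machine had no CUDA device at all. This is only a minimal sketch, assuming a PyTorch-based setup (which is what Kohya_ss builds on); `pick_device` is a helper name I made up, and it is not part of the trainer.

```python
def pick_device() -> str:
    """Return 'cuda', 'mps', or 'cpu' depending on what PyTorch can see."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed, so no GPU backend can be used from Python.
        return "cpu"

    # CUDA is only available with an Nvidia GPU and a CUDA-enabled PyTorch build.
    if torch.cuda.is_available():
        return "cuda"

    # Apple silicon Macs expose the GPU through the MPS backend instead.
    # getattr guards against older PyTorch versions that lack this module.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"

    return "cpu"


print(f"Best available device: {pick_device()}")
```

On a Mac with an ARM chip this would never report `cuda`, since CUDA is specific to Nvidia hardware, which matches what I was running into.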

No matter what I tried, including changing the code, nothing worked. Honestly, it was here that my lack of technical ability became very evident to me. There were some band-aid fixes online, but it seemed like the best approach would be to rent a cloud server with the necessary hardware instead. I decided to draw a line and move on with that.

Repeating The Steps

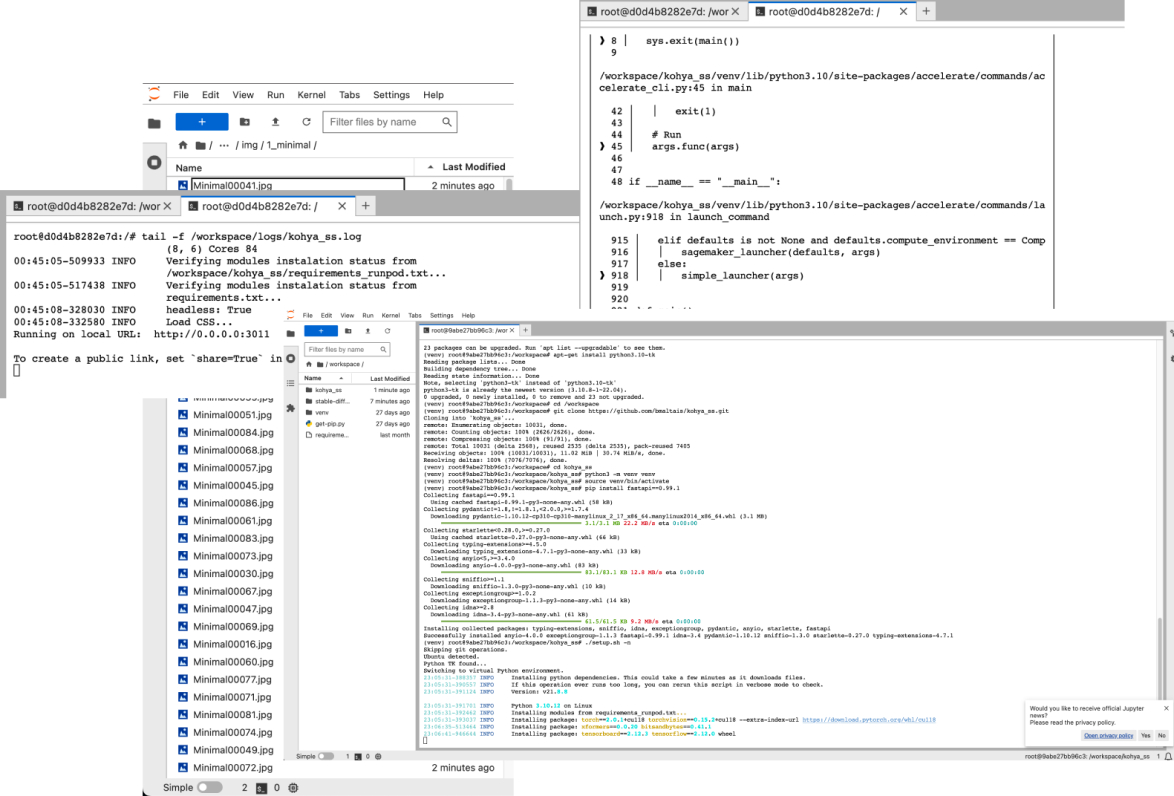

I chose RunPod, a server provider that many tutorials seemed to recommend. I then chose the server with the most commonly recommended specs and basically had to repeat the entire installation process, since this was effectively a clean computer.

Image of: Figuring out the cloud service provider.

One would have thought using a cloud server would have fixed things. But alas, no. I was once again bombarded with similar errors. Luckily, these were much simpler to resolve, and I was finally able to begin the training.

I fed the images to the trainer and let it run. Unbelievably, the training took over 2 hours, and this was on a computer with quite substantial computing power. On reflection, I was quite relieved I hadn’t used my own personal computer for this. I finally came to understand why, in the present-day AI race, the stock price of the hardware manufacturer Nvidia has skyrocketed so much.

Image of: So many issues encountered with the cloud server.

With the model trained, it was time to start prompting! Or so I thought. Despite my having followed the tutorials to a tee, there seemed to be some issue with the model or the computer. Images would begin to load, but the entire program would crash repeatedly with the same error message, and the suggested fixes did nothing to resolve it.

Image of: Attempting to generate using the trained LoRA, and instead generating pixelated noise.

Honestly, it was really hard to troubleshoot with the little machine learning knowledge I had. After I consulted our supervisor, Andreas, it was decided that perhaps I should just end this exploration here. I learnt plenty about the process of training a generative AI, and considering that I have little intention of simply copying the work of others just to make yet another stylised generative AI model, it was a good stopping point.