SEMESTER 1 Week 4

AI Training Attempt No. 2

Trying again

After the failed training attempt in the previous week, I had a conversation with someone in the industry who was also dabbling with A.I. for similar product. This conversation reminded me that maybe instead of scrapping for images like what the other tools were doing, I could change my approach to use what I already had on hand–assets from the company I work for. After seeking permission to use the images, I got to work.

Image of: My dataset from the borrowed illustrations.

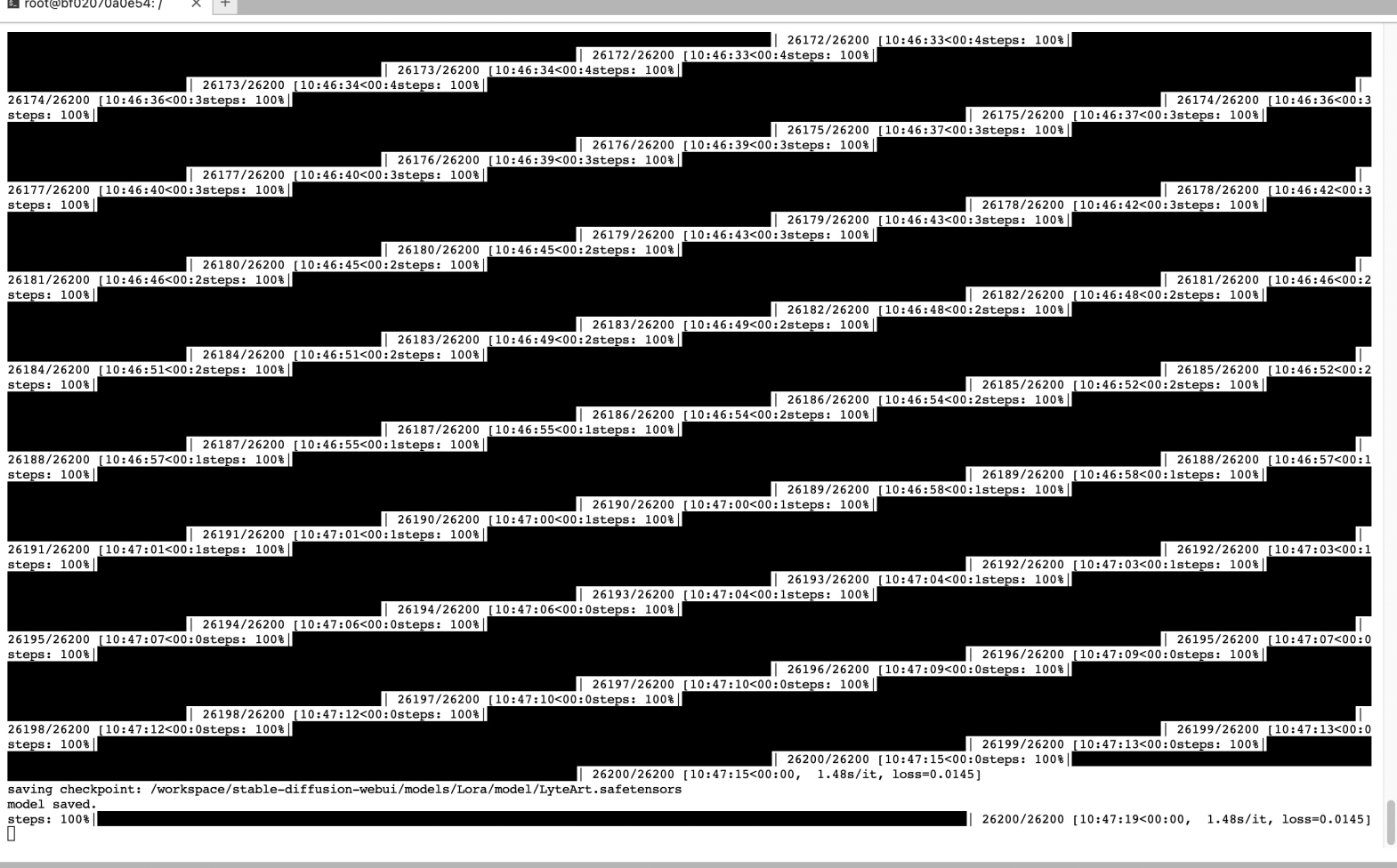

The process was mostly the same as the previous weeks. By now, I was quite familiar with the way it works and faced basically no errors. I was also more aware of what some of the settings in the training GUI did, like network score which controls how much the training should override the existing stable diffusion model. This time round, I made sure to dabble with some settings that should make the model adopt the style better.

Tinkering with the settings made the training time increase by 6x, from 2 hours previously to 12 hours. Of course it’s not comparable since there’s more images now plus a bunch of other variables were changed, but this was definitely still a shock.

Tinkering with the settings made the training time increase by 6x, from 2 hours previously to 12 hours. Of course it’s not comparable since there’s more images now plus a bunch of other variables were changed, but this was definitely still a shock.

Image of: The new LoRA being trained over time.

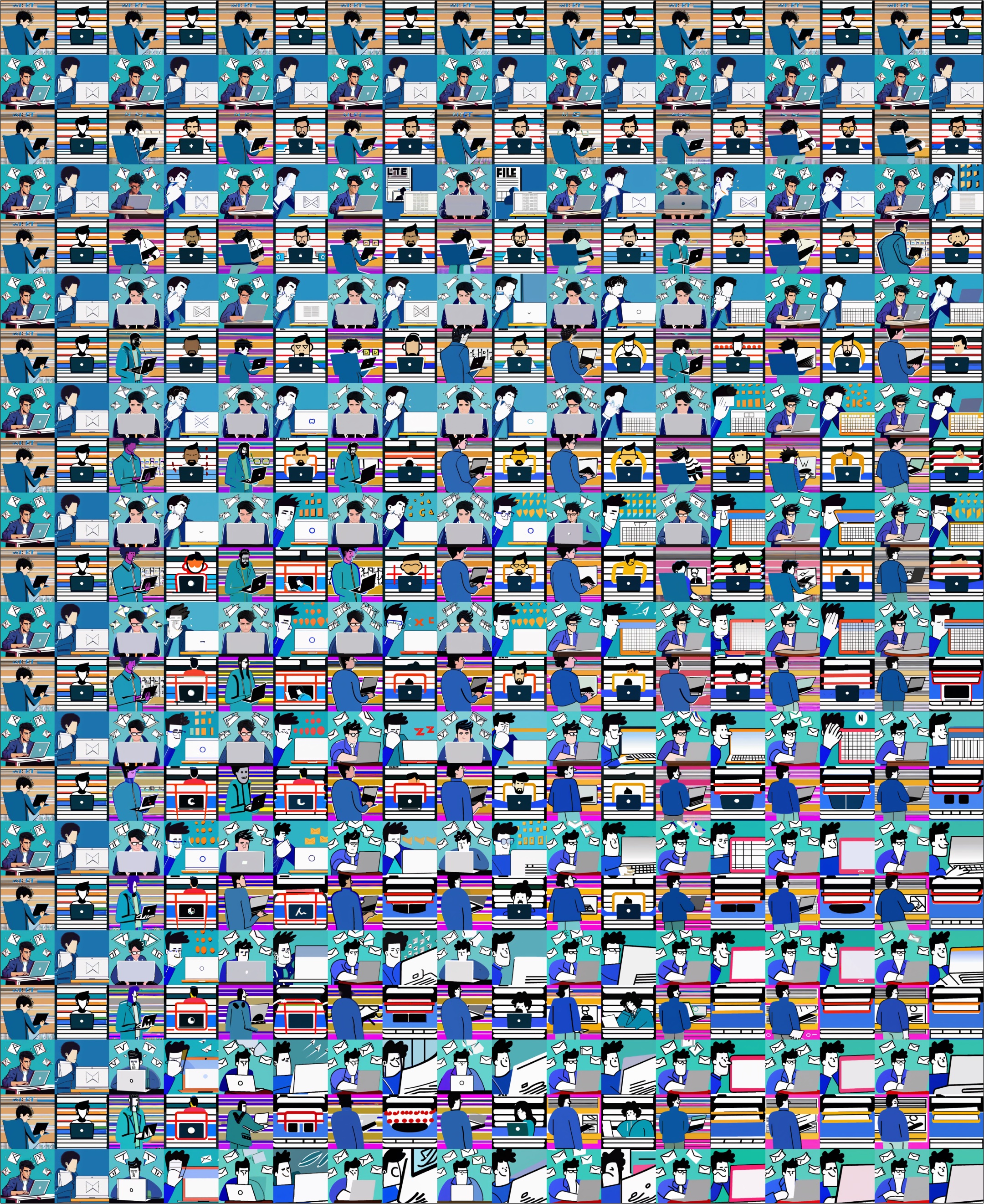

I ran a quick test to see the kinds of outputs that could be derived from the new model once the training was completed, and was extremely pleased to see that the model did manage to adopt the style to quite a significant extent. The panel below demonstrates this, with the bottom right being the most stylised.

Image of: Generating a sample grid to see what the LoRA is capable of.

At this point, I’m not so sure what I am going to do with this capability yet, but at the very least the model works well. I went on to use the model to generate some images with the same prompt several more times before calling it a day.

Looking back over the past three weeks that I’ve spent on this endeavour, I’ve really come to vaguely understand the steps that a creative might need to take to be able to train an A.I. for their use cases. During discussions both within and outside of school, I like to use the example of a concept artist who can train an A.I. to generate backgrounds for them so that they can focus only on the character concepts.

However, after my three weeks of toying around with the system and honestly, barely scratching the surface, that scenario still seems to be quite far. Just considering the effort put into this, I struggle to see why any non-A.I.-enthusiast will ever train their own model for their use cases instead of using one of the available tools like MidJourney and Dall-E. I guess that’s the reason as to why those tools have exploded with such popularity in the first place.

Looking back over the past three weeks that I’ve spent on this endeavour, I’ve really come to vaguely understand the steps that a creative might need to take to be able to train an A.I. for their use cases. During discussions both within and outside of school, I like to use the example of a concept artist who can train an A.I. to generate backgrounds for them so that they can focus only on the character concepts.

However, after my three weeks of toying around with the system and honestly, barely scratching the surface, that scenario still seems to be quite far. Just considering the effort put into this, I struggle to see why any non-A.I.-enthusiast will ever train their own model for their use cases instead of using one of the available tools like MidJourney and Dall-E. I guess that’s the reason as to why those tools have exploded with such popularity in the first place.